RACONTEURPH

Stories worth sharing

NVIDIA Introduces Innovations, Strengthens Partner Ecosystem to Shape AI Future

POSTED BY: Lionell Go Macahilig, 2025-06-02 12:09:33 PHT

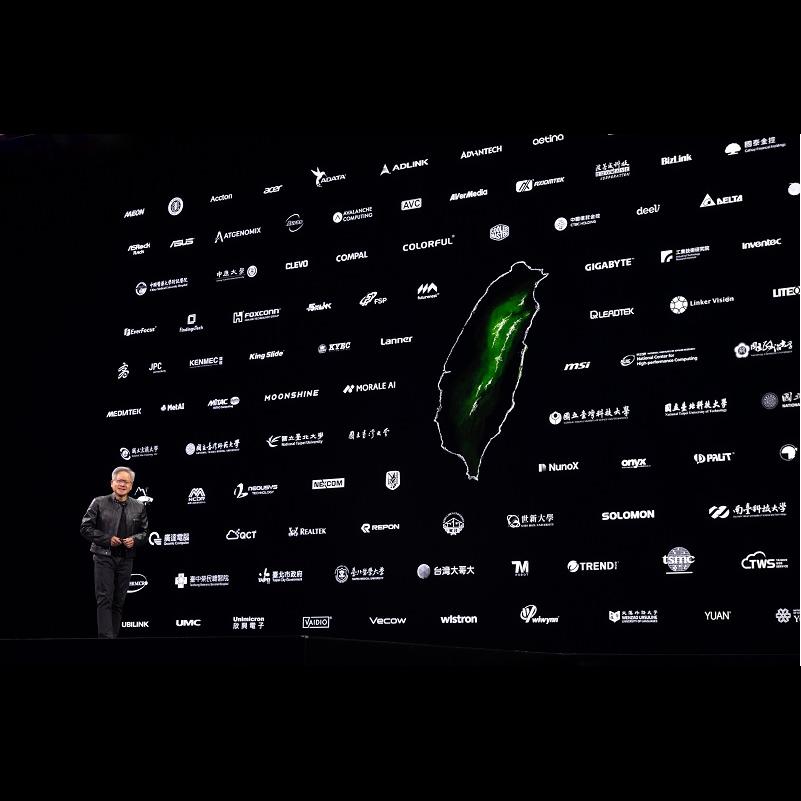

In his keynote at COMPUTEX 2025, NVIDIA founder and CEO Jensen Huang unveiled partnerships and advances across artificial intelligence (AI), quantum computing, cloud services, and data center infrastructure to accelerate AI development and adoption.

The keynote culminated in Huang's announcement of NVIDIA Constellation, the company's planned headquarters in Taiwan, set to rise in the Beitou-Shilin Technology Park in Taipei. The decision to establish NVIDIA Constellation in Taiwan highlights Taiwan's skilled workforce and robust semiconductor supply chain. The facility is expected to attract talent from around the world.

Alliance to Build AI Factory

NVIDIA and Foxconn (Hon Hai Technology Group) announced that they are strengthening their partnership and working with the Taiwan government to build an AI factory supercomputer that will deliver NVIDIA Blackwell infrastructure to researchers, startups, and various industries.

In this partnership, Foxconn will provide the AI infrastructure through its subsidiary Big Innovation Company as an NVIDIA Cloud Partner. With 10,000 NVIDIA Blackwell GPUs, the AI factory is expected to significantly expand AI availability and propel innovation for Taiwan's researchers and enterprises. The Big Innovation Cloud AI factory will feature NVIDIA Blackwell Ultra systems, including the NVIDIA GB300 NVL72 rack-scale solution with NVIDIA NVLink, NVIDIA Quantum InfiniBand, and NVIDIA Spectrum-X Ethernet.

Meanwhile, the Taiwan National Science and Technology Council will use the Big Innovation Company supercomputer to deliver AI cloud computing resources to Taiwan's technology ecosystem and expedite AI development and adoption across various sectors. Additionally, researchers from Taiwan Semiconductor Manufacturing Company (TSMC) will use the supercomputer to advance their research and development.

Enabling Industries to Build AI Infrastructure

NVIDIA is enabling industries to build semi-custom AI infrastructure with new silicon called NVIDIA NVLink Fusion. MediaTek, Marvell, Alchip Technologies, Astera Labs, Synopsys, and Cadence are among the first to adopt NVLink Fusion. Using this silicon, CPUs from Fujitsu and Qualcomm can also be integrated with NVIDIA GPUs to create NVIDIA AI factories.

NVLink Fusion also enables cloud providers to scale out AI factories to millions of GPUs by using any ASIC, NVIDIA rack-scale systems, and the NVIDIA networking platform. That platform delivers up to 800Gb/s of throughput and features NVIDIA ConnectX-8 SuperNICs, NVIDIA Spectrum-X Ethernet, and NVIDIA Quantum-X800 InfiniBand switches, with co-packaged optics available soon.

Accelerating Transition to AI Factories

To help the world's trillion-dollar IT infrastructure transition to enterprise AI factories, NVIDIA introduced RTX PRO Servers and a new NVIDIA Enterprise AI Factory validated design for building data centers. Equipped with NVIDIA RTX PRO 6000 Blackwell Server Edition GPUs, RTX PRO Servers enable data centers to take advantage of the performance and energy efficiency of the NVIDIA Blackwell architecture.

Meanwhile, with the NVIDIA Enterprise AI Factory validated design, partners can build a new class of on-premises infrastructure, featuring RTX PRO Servers, NVIDIA Spectrum-X Ethernet networking, NVIDIA BlueField DPUs, NVIDIA-certified storage systems, and NVIDIA AI Enterprise software, to speed up product design and engineering simulation applications.

AI-First DGX PCs Roll Out

At COMPUTEX 2025, NVIDIA announced that Taiwan's leading system manufacturers will build NVIDIA DGX Spark and DGX Station systems to empower developers, data scientists, and researchers worldwide.

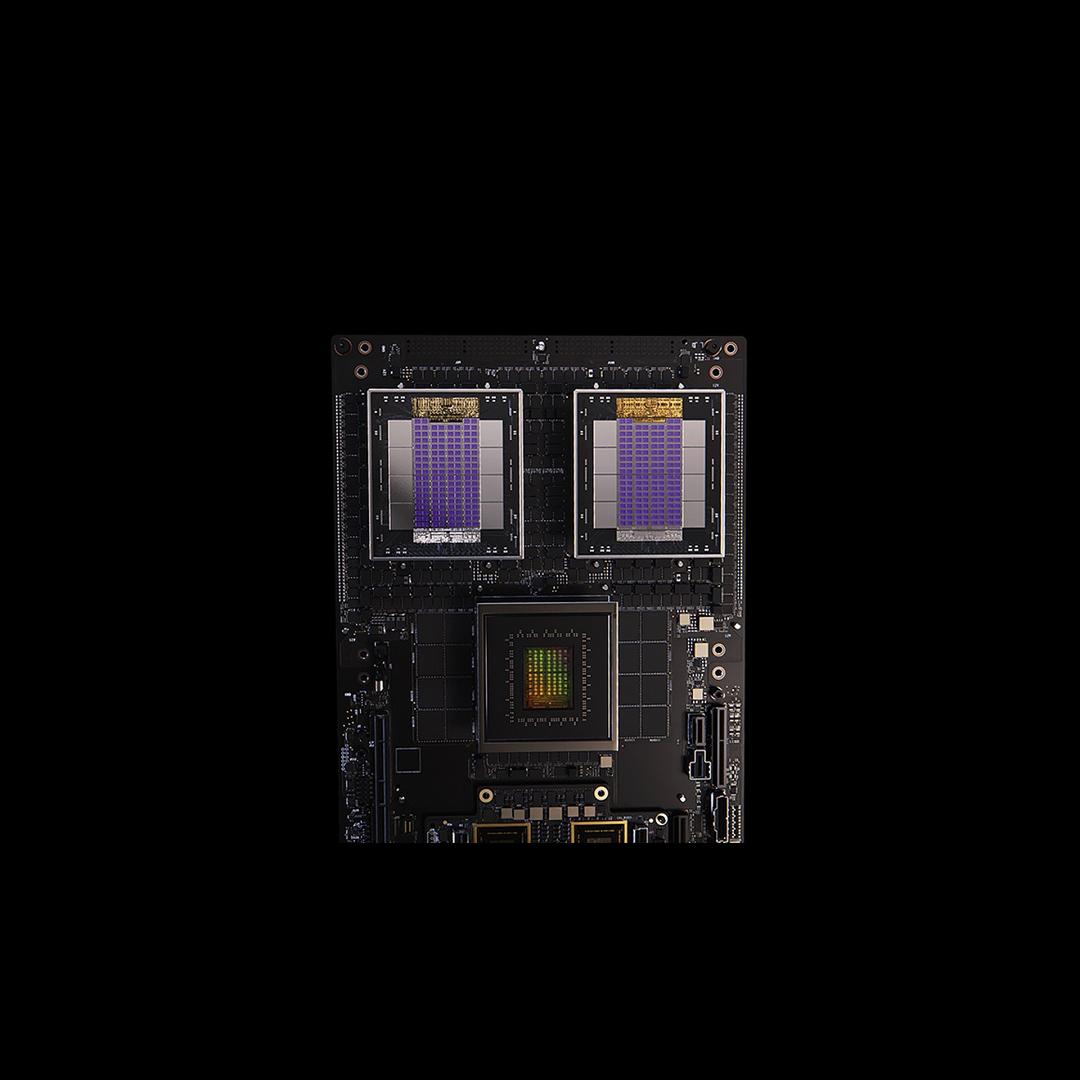

With the NVIDIA GB10 Grace Blackwell Superchip and fifth-generation Tensor Cores, the DGX Spark delivers up to 1 petaflop of AI compute and 128GB of unified memory, enabling seamless exporting of models to NVIDIA DGX Cloud or any accelerated data center or cloud infrastructure. DGX Spark will be available from Acer, ASUS, Dell, GIGABYTE, HP, Lenovo, and MSI starting in July.

Meanwhile, the DGX Station features the NVIDIA GB300 Grace Blackwell Ultra Desktop Superchip, offering up to 20 petaflops of AI performance and 784GB of unified system memory. The DGX Station also comes with an NVIDIA ConnectX-8 SuperNIC, which supports networking speeds of up to 800Gb/s for high-speed connectivity and multi-station scaling. DGX Station will be available from ASUS, Dell, GIGABYTE, HP, and MSI later this year.

Powering World's Largest Quantum Research Supercomputer

NVIDIA also announced the opening of the Global Research and Development Center for Business by Quantum-AI Technology (G-QuAT), which hosts ABCI-Q, the world's largest research supercomputer for quantum computing.

Delivered by Japan's National Institute of Advanced Industrial Science and Technology (AIST), the supercomputer has 2,020 NVIDIA H100 GPUs interconnected by the NVIDIA Quantum-2 InfiniBand networking platform. The system is integrated with NVIDIA CUDA-Q, an open-source hybrid computing platform for orchestrating the hardware and software needed to run quantum computing applications.

Marketplace for AI Developers

Finally, NVIDIA announced DGX Cloud Lepton, an AI platform with a compute marketplace that connects developers building agentic and physical AI applications with tens of thousands of GPUs available from a global network of cloud providers.

NVIDIA Cloud Partners CoreWeave, Crusoe, Firmus, Foxconn, GMI Cloud, Lambda, Nebius, Nscale, SoftBank, and Yotta Data Services will offer NVIDIA Blackwell and other NVIDIA architecture GPUs on the DGX Cloud Lepton marketplace. Through this marketplace, developers can tap into GPU compute capacity in specific regions to support strategic and sovereign AI requirements. Developers can now sign up for early access to DGX Cloud Lepton.